Are We Learning From Evaluations? – Leadership 360 – Education Week

In a recent article, "Are We Learning From Evaluations?" (Leadership 360, Education Week), Jill Berkowicz and Ann Meyers make a case about the fallacies behind new evaluation systems that use scoring mechanisms to report "what isn't being done well enough."

I applaud them for their insight into this nutty problem of evaluation, which has haunted us for decades. I grew up in administration in Washington State, where new evaluation systems continue to be debated while an instrument first crafted in the 1970s remains in use. Changing educational practice is never easy!

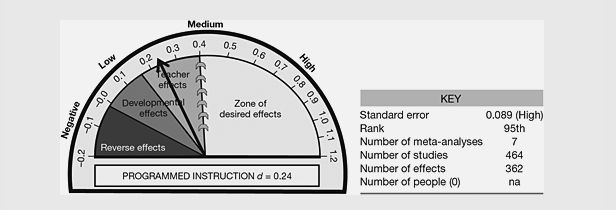

But Jill and Ann argue that we should hold teacher evaluation to the same standard as student feedback, which is a cornerstone of our current understanding of achievement and motivation. Hattie (2008) has largely confirmed this precept. As I read their treatise, I must admit that I felt some tension over reducing a professional appraisal process to the equivalent of giving effective feedback to a 6th-grade writer. Don't misunderstand: I agree that giving a 6th grader a single grade on a written paper is unlikely to motivate them and does little to help them learn to become better writers. I believe the authors make this argument quite effectively.

But the teacher evaluation system serves two purposes. At its core, it does serve to inform improved practice; it must also provide for accountability. The authors' quote here is quite profound:

> In 1975 a Handbook for Faculty Development was published for the Council for the Advancement of Small Colleges. In it they list 13 characteristics that certainly apply. Feedback needs to be descriptive rather than evaluative. Sound familiar? In the new teacher and principal evaluation systems, the requirement to provide 'evidence' is exactly that. No interpretation, no value judgment, simply what was seen. Included in the 13 is also that feedback be specific, can be responded to, is well timed, is the right amount (too much can't be addressed at once), includes information sharing rather than telling, is solicited (or welcomed as helpful) and plays a role in the development of trust, honesty, and a genuine concern.

I do find that our use of a framework of standards (Danielson, 2011) allows us to gather evidence and then align it to a set of descriptors that turn the evidence into feedback. This feedback includes a score, and rightfully so! Rubrics were written with the express purpose of quantifying feedback and setting performance targets in the form of exemplars associated with higher rubric scores.

This is where the authors fall short. They overlook that without goals, exemplars, and appropriate targets, feedback misses its true purpose: greater achievement, whether for students or for professionals. To that end, we have to consider how the evidence we collect is used to provide for accountability. Through the relationships the authors rightly say must be in place, evaluators have to be able to hold both facilitative and instructional conversations that drive improved practice and the development of expertise. Somebody in that conversation has to make a value judgment for the process to be something more than spinning wheels on the slippery slope of mediocrity.

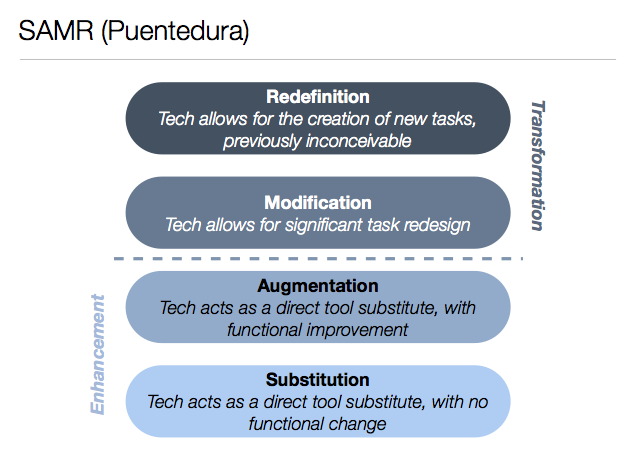

We then proceeded to the PTO meeting and greeted faces both new and returning in a packed room. We shared our vision for the year and discussed how we find "gravity" together and keep our connection to core values while we take the next logical steps in our strategic plan. At our core, we have some broad goals for students, and these are linked to the Outcomes established during our strategic planning process just over a year ago. Here they are for your review: