ECIS Panel Discussion – April, 2012

Initial thoughts:

The initial question posed to the conference panel, which I’ve been asked to address:

Information Technology in school – Does it improve learning?

I gathered some resources to begin addressing this question and related topics:

https://www.evernote.com/pub/chinazurfluh/technologyitems

The key issue in answering the question is, first, how you define improved learning. The learning targets currently accepted often revolve around norm-referenced test scores, because we rely on these measures to show growth or performance against a larger data set. There is some validity to this, given the large data set and body of experience accumulated over decades of using these measures.

However, these kinds of measures are ill suited to measuring 21st-century skills. They effectively measure math, reading, writing, and core knowledge competency, but they do little to measure attitudes, intellectual processing skills, and skills revolving around independence, collaboration, and innovation. We have scores of examples of students who are truly gifted as leaders and complex thinkers but who routinely scored below average on the accepted measures.

So, if you are asking me whether information technology improves learning, I would have to answer “No.”

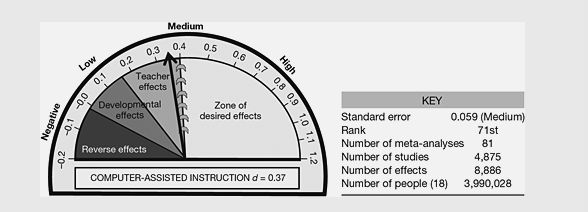

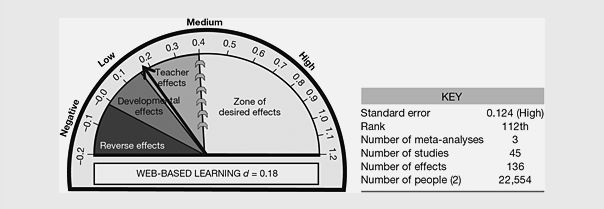

There is no clear empirical evidence that information technology, as an independent variable, correlates with improved student learning, as a dependent variable, in the traditional, measured definition of the term.

I would suggest that addressing this question from a quantitative point of view is faulty from the outset. This is the same logic that has led Americans to ignore the impact of poverty on education and learning. We’ve spent more than a decade comparing our results to international measures while ignoring how poverty has affected our bottom line. A recent AASA blog entry highlights the fallacy of a standards movement that tries to address educational reform while ignoring the poverty gap between countries (e.g. Finland with 4% in poverty vs. the U.S. at 21%). Quantitative measures are insufficient for addressing complex issues.

Logic confirms that if we want to address what technology enables, we need different goals for education. In the truest tradition of backward design, it begins with this question:

What world are we preparing kids to live in?

Addressing that question and looking at essential skills for a 21st-century world is where we truly should be focused. With regard to this question, the next logical, qualified question is:

Does the use of information technology in schools prepare kids for a technology-rich world we can scarcely describe in the current moment?

Then the answer would be a resounding and passionate YES!! Now let’s design and build measures for the skills that emerge from this backward design, measures that are meant to really test whether students are developing 21st-century skills. Let’s get beyond the issue of technology as an entity and look at how we create technology-rich environments that eminently prepare students for the world of their future.

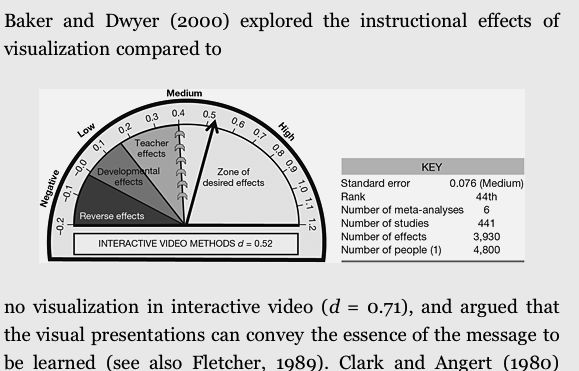

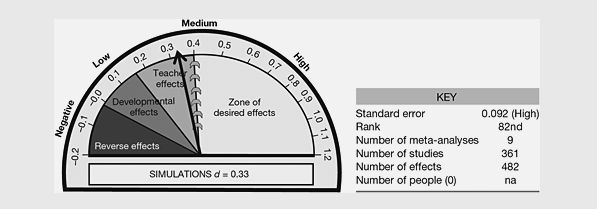

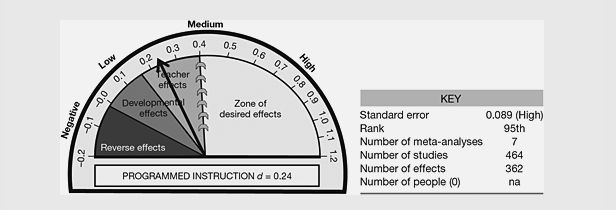

Hattie research:

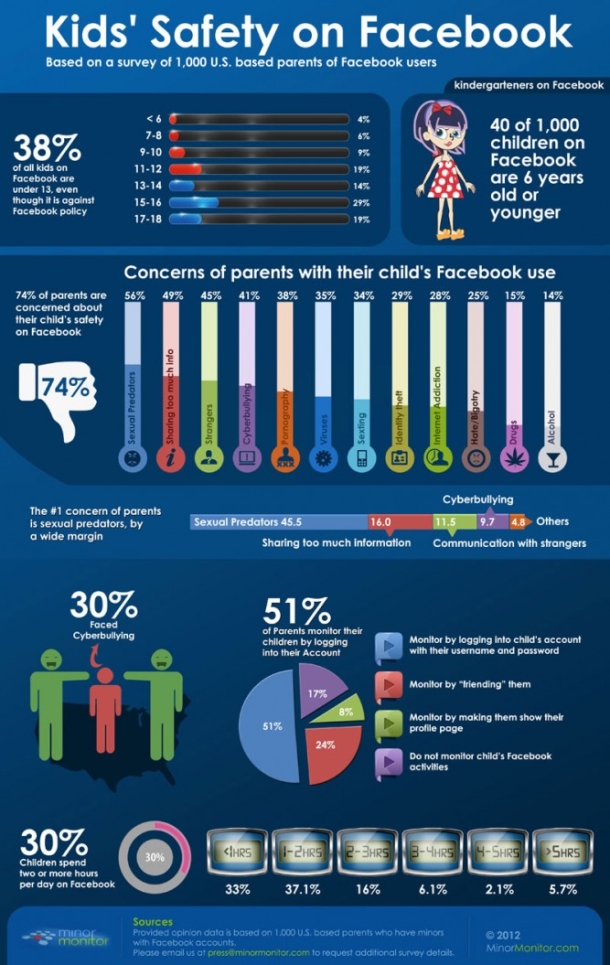

and one recently reported danger from CNET: